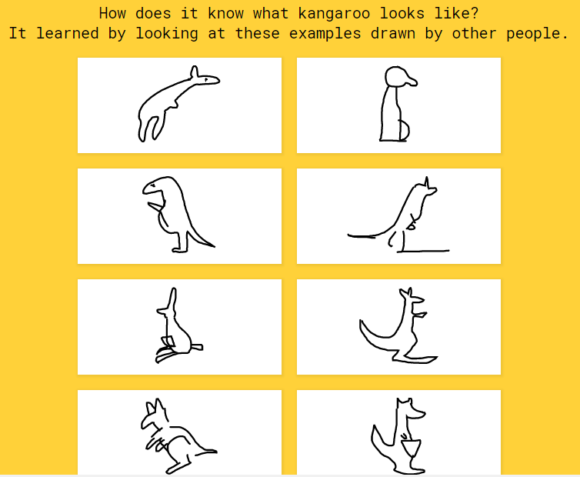

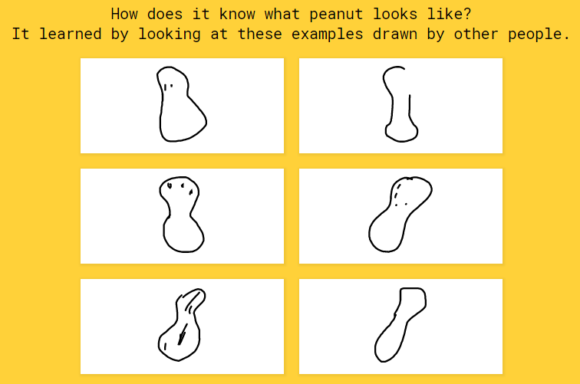

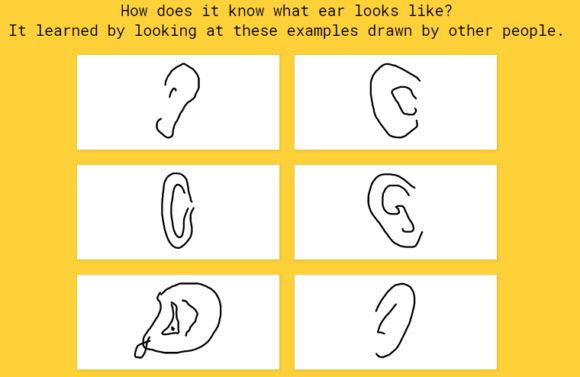

I focused on the website Quick, Draw!, a game from Google where the user is given a word to draw in 20 seconds and an AI tries to guess what is being drawn. The user provides drawings, which give the AI data so that it can become smarter and learn to recognize and distinguish different things from one another. Its typical use is as a fun pastime, and the audience is likely anyone because it was used for data collection, but I think it was mostly used by younger people since it became popular through social media like Tumblr and YouTube.

Day 1: When I started on day 1, my only idea was to draw something other than what I was told to draw. I originally wanted to find my art among all of the other drawings, see whether other people had also ignored the prompt, and see how long it would take for my drawings to be taken down. After my first round of drawing, I went through the data to try to find one of my drawings. I noticed that it wasn’t organized in any particular order, and the only dates I saw were in January or March of 2017 (which may have just been a coincidence of the random ones I clicked, but I clicked a LOT). Knowing this, I assumed my drawings weren’t shown because either not all of the data is shown or it is so randomly organized that I could never find them. I think the second explanation is less likely, since every date I saw fell between January and March. Instead of looking for my drawings in the data sets, I changed my plan to testing the game and the AI’s intelligence by drawing what was asked in less traditional ways. I still tried to do my best and “win” by drawing what was asked, but I was never able to win since I drew differently from how the game is normally played. Each day I played the game about 10 times.
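Clicking through drawings one at a time is a slow way to check the dates. A minimal sketch of a faster check is below. It assumes the public data files are newline-delimited JSON where each record has a `timestamp` field beginning with the year and month; the field name, the timestamp format, and the sample records are all assumptions made up for illustration, not real data.

```python
import json
from collections import Counter

def count_by_month(ndjson_lines):
    """Tally drawings per 'YYYY-MM' month from newline-delimited JSON records."""
    counts = Counter()
    for line in ndjson_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        # Assumption: timestamps start with 'YYYY-MM', e.g. '2017-03-20 13:35:41 UTC'
        counts[record["timestamp"][:7]] += 1
    return dict(sorted(counts.items()))

# Made-up sample records (hypothetical, for illustration only)
sample = [
    '{"word": "cat", "timestamp": "2017-01-23 10:00:00 UTC"}',
    '{"word": "cat", "timestamp": "2017-03-05 18:30:00 UTC"}',
    '{"word": "cat", "timestamp": "2017-03-20 13:35:41 UTC"}',
]
print(count_by_month(sample))
```

If every month in a real file fell between January and March of 2017, that would support the idea that only part of the collected data is shown.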

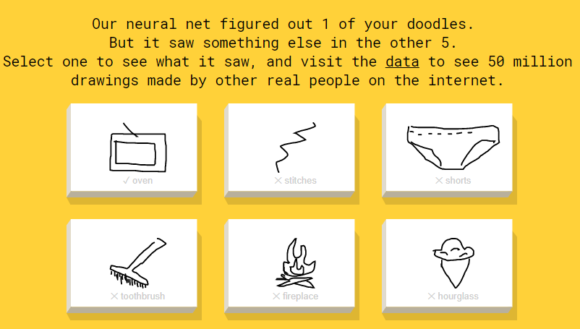

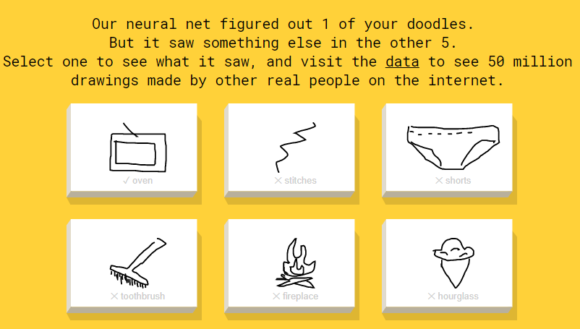

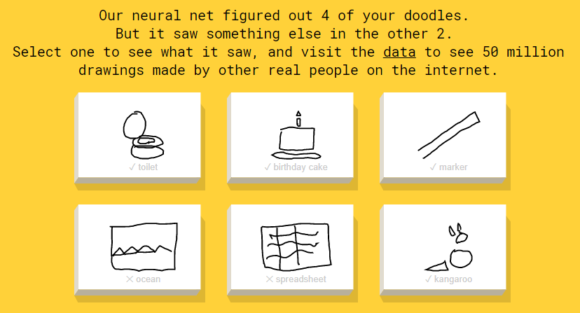

Day 1 Example: I didn’t know what I was doing, so at first I drew cats, since that was the example I gave in class. Halfway through, I realized I could draw cats vaguely shaped like what was being asked, as shown by the results above.

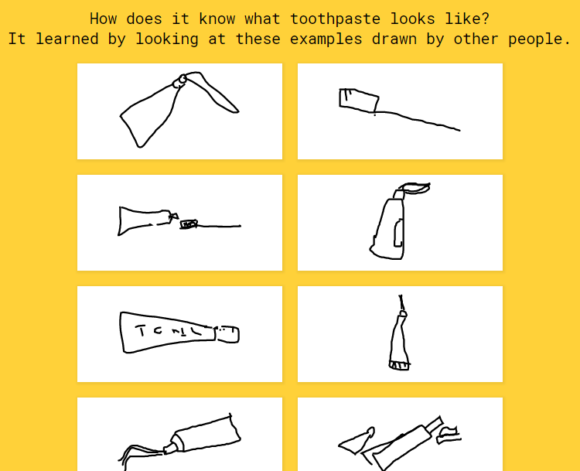

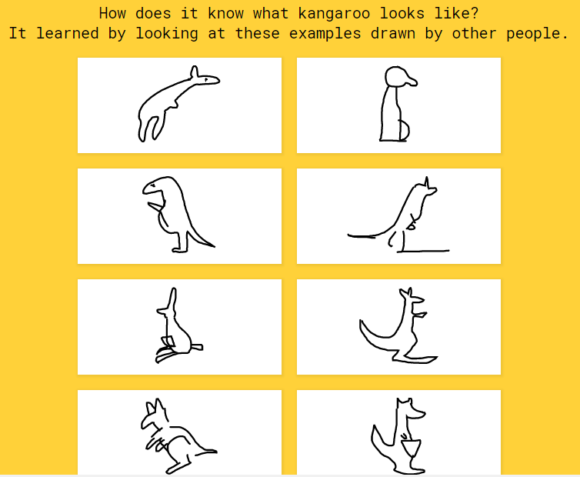

Day 2: I noticed when looking at the data sets that most drawings were drawn from one particular angle, so to test the AI’s knowledge I drew everything upside down. Most of my drawings weren’t guessed, and the ones that were guessed tended to be reversible, so they still made sense upside down. To do this, I started by drawing in a sketchbook for reference and then transferred that to the computer, so that it would be easier for me and more accurate for the game to guess.
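For anyone who would rather flip an existing drawing than redraw it by hand, here is a minimal sketch of what "upside down" means in terms of stroke data. It assumes a drawing is stored as a list of strokes, each stroke being a list of x values and a list of y values on a 0 to 255 canvas. That layout and the function name `rotate_180` are assumptions for illustration, not something taken from the game itself.

```python
def rotate_180(drawing, size=255):
    """Rotate a drawing 180 degrees around the center of a size x size canvas.

    Assumed layout: drawing = [[xs, ys], ...], one [xs, ys] pair per stroke.
    """
    return [
        [[size - x for x in xs], [size - y for y in ys]]
        for xs, ys in drawing
    ]

# Hypothetical one-stroke drawing: a short line near the top-left corner
stroke_data = [[[0, 10], [0, 20]]]
flipped = rotate_180(stroke_data)
print(flipped)
```

Rotating twice gives back the original drawing, which is a quick way to check that the flip is doing what it should.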

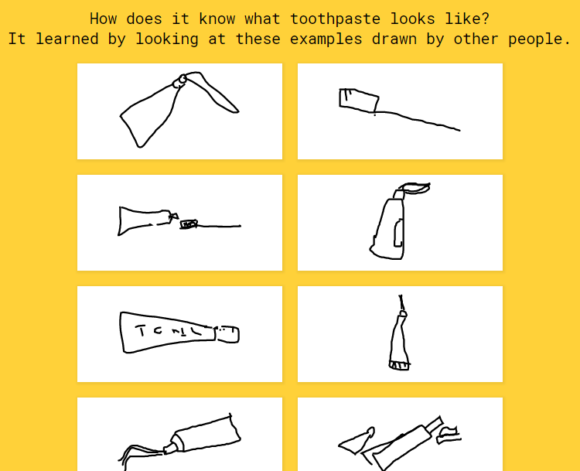

For example, the AI wasn’t able to guess snail, which looks pretty obvious to me. It was able to guess toothpaste, since the toothpaste drawings in the data were drawn from all sorts of angles, so no angle would technically be considered upside down.

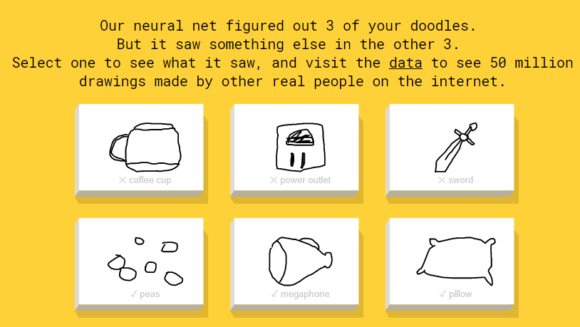

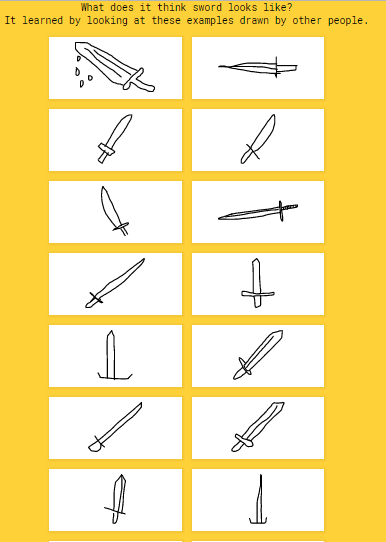

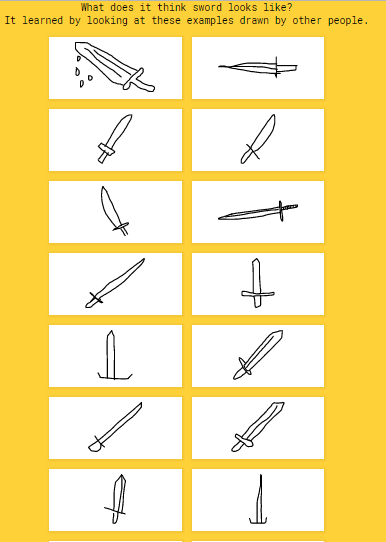

This one surprised me because I feel like a sword is clearly distinguishable from all angles, but once again all of the data collected is drawn one way, so this could cause my drawing to be ruled out. Out of all the drawing tests I did on the program, doing them upside down was the most fun for me. None of my drawings are wrong just because they’re upside down, so if they could be found in the data there isn’t a reason anyone should report them, and they’re teaching the AI to see things in more ways than one (you know, maybe, or maybe not, since I’m just one person so the drawings might not do much).

Some problems that I think could stop this test from giving the best results are my drawing ability, the time limit, and the fact that the program has a limited list of things to guess from, which might make it guess the right thing because there’s nothing else left.

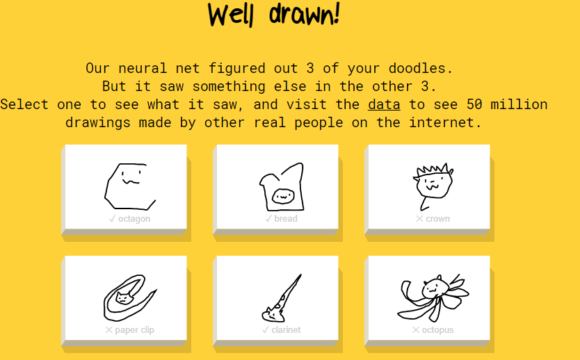

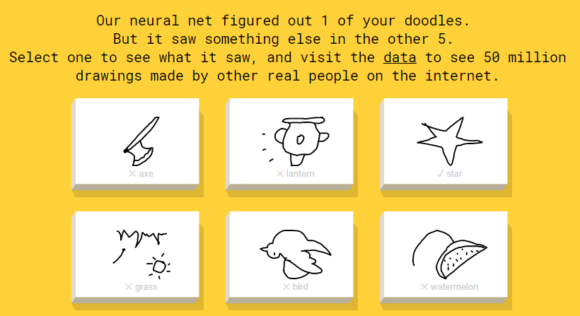

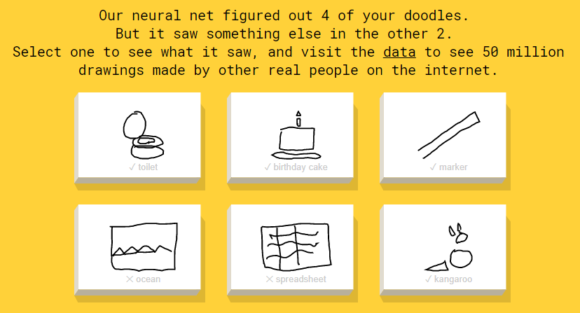

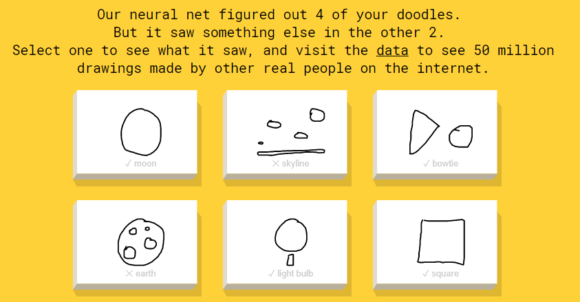

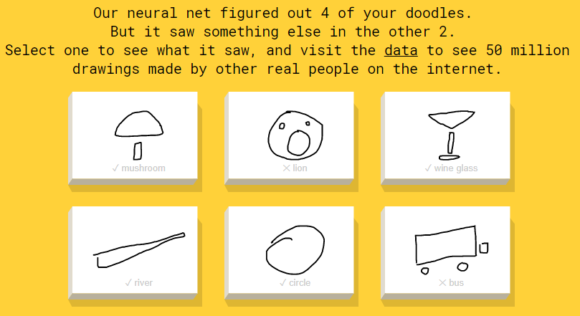

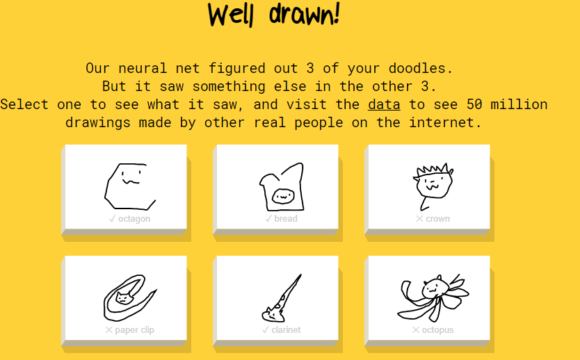

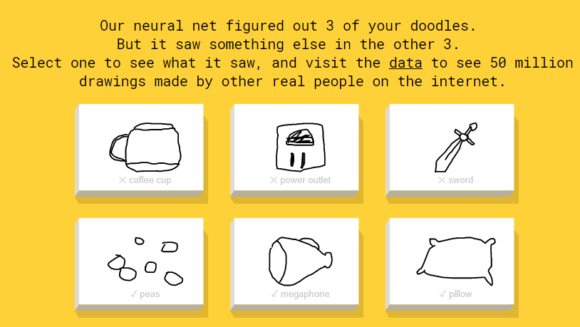

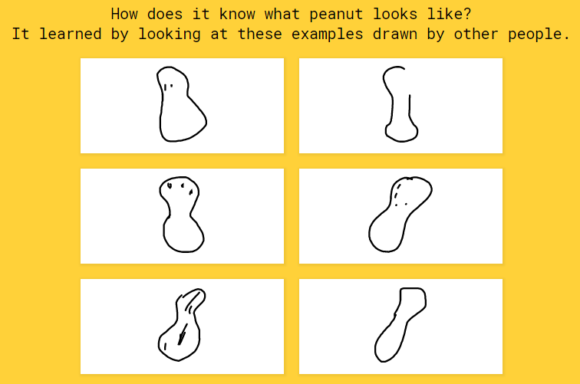

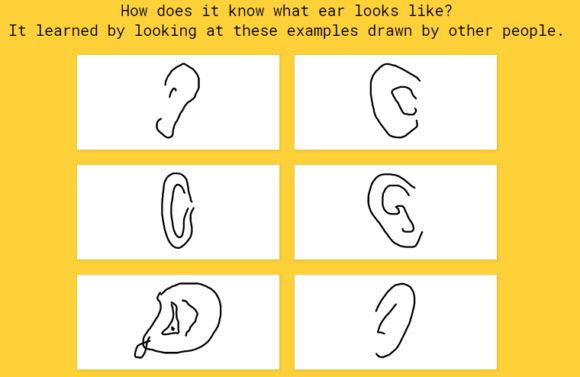

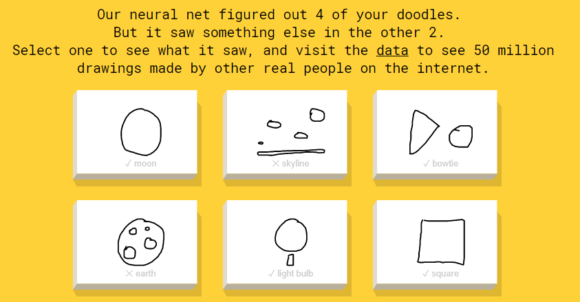

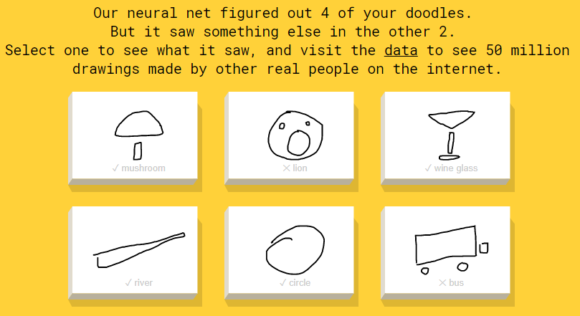

Day 3: For day 3 I did 2 different drawing tests. The first one came from noticing how little the program needed in order to guess certain things, so I wanted to draw the words in the most minimal way possible, using only basic shapes. This was the closest I got to getting 6/6 right out of all of my attempts, which I think shows that the AI can recognize things based on position, once again, and simple structure. I think what confused the AI the most during this test was the extra lines and spaces, since I was trying to separate the shapes so that what I was doing would come across more clearly, but it was still able to guess a lot right.

This was a good example to me of how little the AI needs to recognize things. I was really surprised at a lot of the drawings it got right, and I think looking at the data from these tests probably shows the most interesting results since it is so surprising in some cases.

It was also really funny to me because I ended up drawing really similar stuff a lot of the time, since I wanted to keep it simple. For the correct guesses where I drew basically the same thing, you can kinda tell where the AI was coming from, even though the words really don’t have that much in common at all.

Some problems with this test were that some of the words were themselves shapes, which made them easier to draw. I also found it hard to draw the simplest form of something, and I definitely think that I could have drawn too much, which may have skewed the answers as well. Finally, I think that the limited list is a problem once again, and I wanted to explore that idea as well.

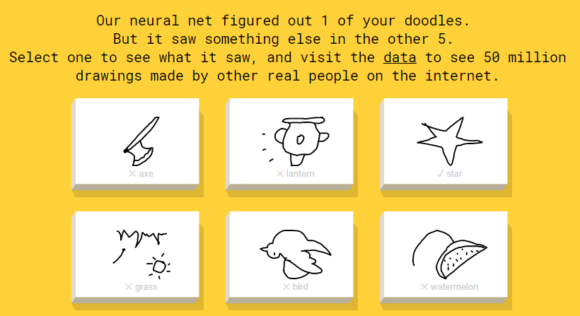

Extra test: I wanted to try drawing other words that looked similar to the word being suggested. The game tells you what the AI thinks you are drawing before it guesses correctly, so I wanted to take those early guesses and draw them instead. This test was the least interesting because I was drawing a completely different word from the one I was supposed to, so the AI almost always got the assigned word wrong. It did, however, guess the word that I was actually drawing each time, so it did know what I was drawing.